A look at content and programming.

Editor’s Note: Part 1 of this article, published in the February issue of Sound & Communications, covered technologies and hardware for video-analytics systems. Part 2 covers content creation and programming.

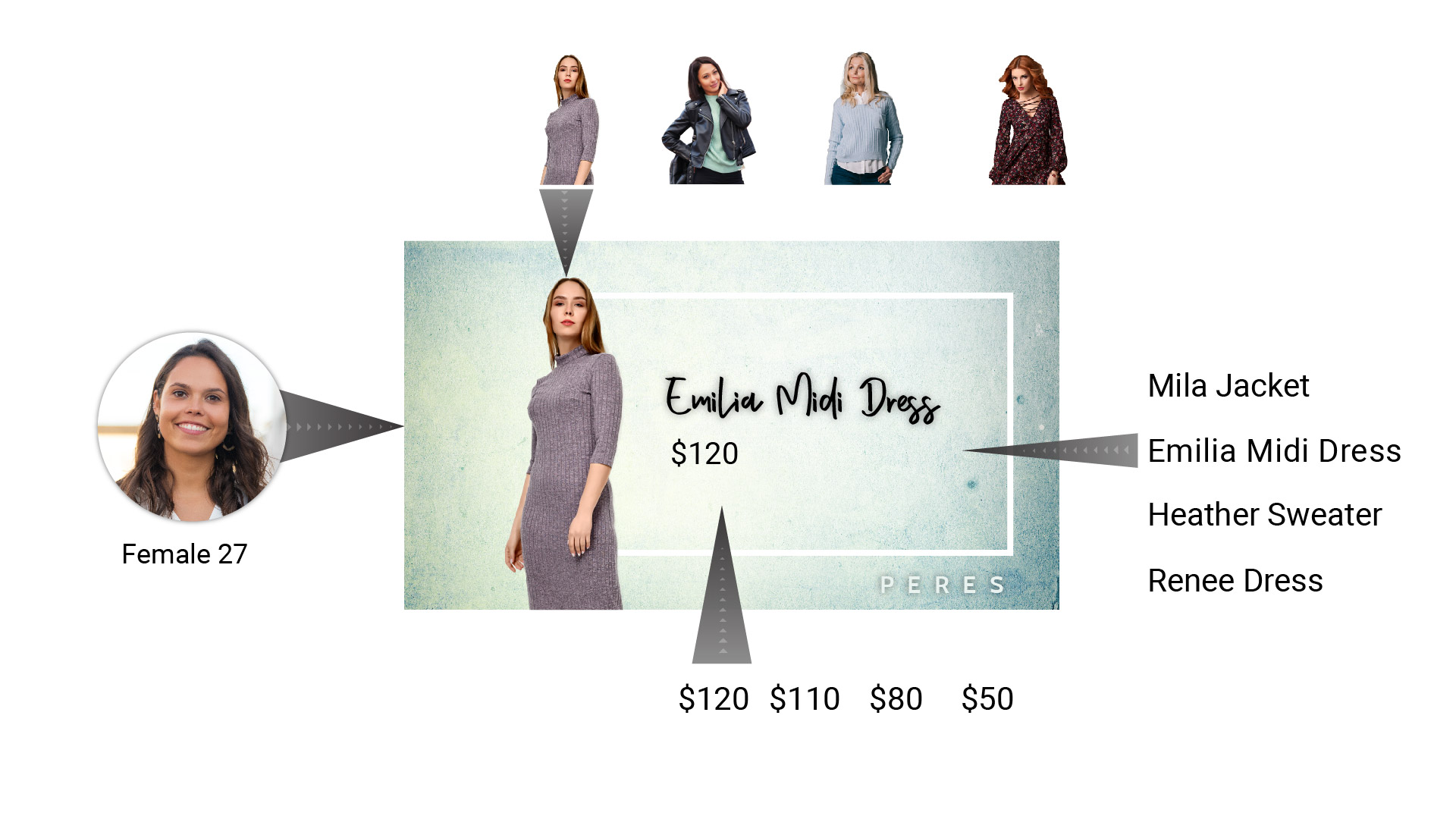

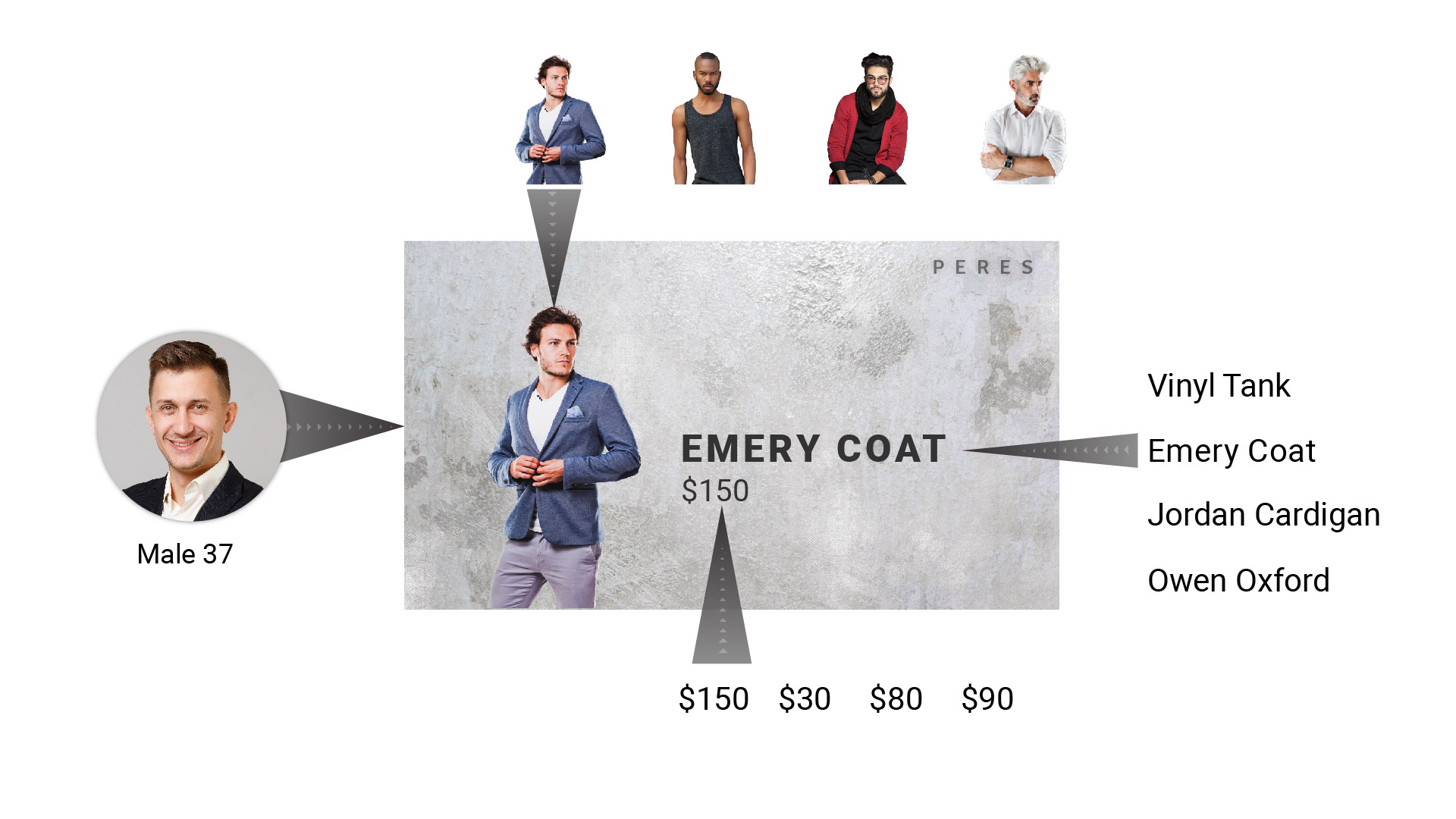

Video-analytics systems detect unique characteristics of viewers of digital signs, such as an individual’s age and gender. At the most basic level, this means digital signs can display different advertising for a 27-year-old woman and for a 37-year-old man. These systems use anonymous facial detection involving a camera that recognizes features of the viewer. This alerts the digital sign’s content-management system (CMS) to trigger specific content based on who is currently looking at the screen.

We live in an information- and advertising-driven world in which screens are everywhere. Those screens will command our attention only when their content is relevant to us and they respect our privacy. Video-analytics technology drives better results for advertisers by delivering a more engaging experience for their unique customers.

For signage to drive results, relevancy matters—no matter if the signs show advertising or internal corporate messaging. We see so many signs throughout the day that we’ve gotten great at tuning most of them out; however, a message targeted to “people like me” has a greater chance of inspiring “people like me” to take action.

A Retail Scenario

Imagine a clothing retailer that serves men, women and children of every age. This store carries everything from business-casual clothing to activewear. It has digital signage at the store entrances, and it is investing in video analytics to make that signage more relevant.

For its first generation of digital signs, the in-store marketing team creates a playlist of eight ads based on current trends, promotions and historical point-of-sale (POS) data. Relevancy isn’t one-to-one; rather, it’s based on great predictions combined with what has worked in the past. Adding video analytics to the signage rollout would increase relevancy, but the playlist would need more than eight ads.

All video-analytics systems detect age and gender, so the retailer decides to target five-year age bands. It divides its customers into bands of 15 to 20, 21 to 25, 26 to 30 and so on, culminating in an over-60 band. This breakdown yields 10 age bands per gender, so the store now needs 20 ads rather than eight.
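The age-band arithmetic above can be sketched in a few lines. This is a minimal illustration, not any vendor’s CMS logic: it assumes the analytics system reports a whole-number age estimate and a gender label, and the slot-key format (`female_26-30`) and function names are hypothetical.

```python
from typing import List, Optional

# Bounded five-year bands from the retail example; the first spans 15 to 20.
BANDS = [(15, 20), (21, 25), (26, 30), (31, 35), (36, 40),
         (41, 45), (46, 50), (51, 55), (56, 60)]
GENDERS = ("female", "male")


def age_band(age: int) -> Optional[str]:
    """Return the band label for a whole-number age estimate, or None under 15."""
    for low, high in BANDS:
        if low <= age <= high:
            return f"{low}-{high}"
    if age > 60:
        return "over-60"  # the open-ended top band
    return None  # too young to target


def ad_slot(age: int, gender: str) -> Optional[str]:
    """Combine gender and age band into a (hypothetical) ad-slot key."""
    band = age_band(age)
    if band is None or gender not in GENDERS:
        return None
    return f"{gender}_{band}"


def all_slots() -> List[str]:
    """Every slot the creative team must fill: 10 bands x 2 genders."""
    labels = [f"{low}-{high}" for low, high in BANDS] + ["over-60"]
    return [f"{g}_{label}" for g in GENDERS for label in labels]


print(ad_slot(27, "female"))  # female_26-30
print(ad_slot(37, "male"))    # male_36-40
print(len(all_slots()))       # 20
```

Each new attribute the system detects multiplies the slot count the same way, which is why the creative load grows so quickly.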

As facial-detection technology improves, systems are detecting a larger number of unique details, including racial or ethnic characteristics. Some software can gauge viewers’ moods and assign a score to how happy they appear. The aforementioned 20 ads could turn into hundreds of unique designs from the creative team. Video-analytics systems are improving through machine learning (ML) and artificial intelligence (AI), but, even so, they sometimes get it wrong. Some content creators therefore build generic ads into their playlists to help catch the viewer’s eye. Thus, the creative team isn’t just making 20 or more static images for its screens; rather, it’s producing motion graphics and rendering more content than ever, all with the goal of delivering an improved in-store experience.
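Mixing generic ads into a targeted playlist can be sketched as a simple fallback rule. This assumes the analytics system reports a confidence score alongside its segment guess; the `Detection` fields, the segment labels and the 0.8 threshold are all hypothetical and would need tuning against a real system.

```python
from dataclasses import dataclass
from itertools import cycle
from typing import Dict, List, Optional


@dataclass
class Detection:
    """Hypothetical result from the analytics system for the current viewer."""
    segment: str       # e.g. "female_26-30"; naming scheme is assumed
    confidence: float  # 0.0-1.0; assumes the system reports a confidence score


class AdPicker:
    """Show a targeted ad when detection is trustworthy, else rotate generic ads."""

    def __init__(self, targeted: Dict[str, str], generic: List[str],
                 threshold: float = 0.8) -> None:
        self.targeted = targeted        # segment -> ad asset
        self._generic = cycle(generic)  # endless rotation of fallback ads
        self.threshold = threshold      # hypothetical cut-off

    def next_ad(self, detection: Optional[Detection]) -> str:
        # No face in view, or a low-confidence guess: fall back to a generic ad.
        if detection is None or detection.confidence < self.threshold:
            return next(self._generic)
        ad = self.targeted.get(detection.segment)
        # No creative exists for this segment yet: also fall back.
        return ad if ad is not None else next(self._generic)
```

Only advancing the generic rotation when a fallback is actually shown keeps the generic ads cycling evenly.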

This creative workflow doesn’t have to be tedious. Templates are a great solution whenever possible. What’s more, the age- and gender-specific ads often entail only product and pricing changes; the overall design of the screen can remain the same. A volume of images or videos must still be created, but with only a few unique changes between each creative asset.
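The template approach described above amounts to filling one shared layout with per-segment values. As a minimal sketch, this uses Python’s standard `string.Template` on text only; a real creative pipeline would feed the same values into a design or video-rendering tool, and the segment labels, products and prices here are invented.

```python
from string import Template
from typing import Dict, Tuple

# One shared layout; only headline, product and price change per segment.
# "$$" is Template's escape for a literal dollar sign.
LAYOUT = Template("$headline | $product | Now $$$price")

# Hypothetical per-segment copy: segment -> (headline, product, price)
SEGMENT_COPY: Dict[str, Tuple[str, str, str]] = {
    "female_26-30": ("New season denim", "Slim-fit jeans", "49.99"),
    "male_36-40": ("Ready for the weekend", "Running jacket", "89.00"),
}


def render_variants(layout: Template,
                    copy_table: Dict[str, Tuple[str, str, str]]) -> Dict[str, str]:
    """Fill the shared layout once per segment; returns segment -> text."""
    return {
        segment: layout.substitute(headline=h, product=p, price=price)
        for segment, (h, p, price) in copy_table.items()
    }


for segment, text in render_variants(LAYOUT, SEGMENT_COPY).items():
    print(segment, "->", text)
```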

Make It Dynamic

Dynamic or programmatic ads are common in online advertising. Our browsers and smartphones know a lot about our online behaviors, including places we visit, apps we use, what we buy and with whom we interact on social-media sites. This information forms a digital fingerprint or profile that drives what ads you see. Ten years ago, this meant that, if you searched for a specific brand of car, you’d see numerous car ads as you browsed other websites. Over time, it has gotten much more sophisticated, and, now, this fingerprint is used predictively to target messages specifically to you.

Assumptions based on patterns and habits help form your profile. Online companies retain large sets of data, and that data drives those assumptions and discovers similarities between people. Numerous digital doppelgangers are out there for each of us. When someone with a similar “fingerprint” to yours buys the newest model of noise-cancelling headphones, for example, there’s a good chance that you’ll see those ads, too—even if you’ve never previously expressed an interest in that product. If you show curiosity, the system keeps track of that and learns to show similar people the same ads. ML and predictive analytics work together to show an ad that’s most relevant to you. This process has gotten so good that we might start to become paranoid that our smartphones are spying on us!
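The “digital doppelganger” idea is often implemented as lookalike matching. The sketch below uses cosine similarity between interest profiles, a common textbook technique; it is not a description of how any particular ad platform works, and the profile format and 0.8 similarity cut-off are assumptions.

```python
import math
from typing import Dict, List

Profile = Dict[str, float]  # hypothetical: interest category -> engagement score


def cosine(a: Profile, b: Profile) -> float:
    """Cosine similarity of two sparse profiles (0 to 1 for non-negative scores)."""
    dot = sum(a[k] * b[k] for k in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def lookalike_products(user: Profile, others: Dict[str, Profile],
                       purchases: Dict[str, List[str]],
                       min_similarity: float = 0.8) -> List[str]:
    """Products bought by people whose profiles resemble the user's."""
    suggestions: List[str] = []
    for person, profile in others.items():
        if cosine(user, profile) >= min_similarity:
            for item in purchases.get(person, []):
                if item not in suggestions:
                    suggestions.append(item)
    return suggestions
```

Note that the user never has to show interest in the suggested product; resembling someone who bought it is enough.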

Dynamic ads are built in HTML5 code and generated in real time. The ad exists only as a graphical template tied to a database of headlines, text copy and images; “your ad” is assembled as you scroll the page.
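The template-plus-database pattern described above can be sketched as follows. Production HTML5 ads usually assemble themselves in the browser with JavaScript; Python and an in-memory SQLite table are used here only to show the idea, and the table schema, segment labels and markup are hypothetical.

```python
import html
import sqlite3
from typing import Optional

# A tiny in-memory stand-in for the creative database (schema is hypothetical).
db = sqlite3.connect(":memory:")
db.execute("CREATE TABLE creative (segment TEXT, headline TEXT, body TEXT, image TEXT)")
db.executemany("INSERT INTO creative VALUES (?, ?, ?, ?)", [
    ("female_26-30", "Denim, reimagined", "Slim-fit jeans from $49.99", "jeans.jpg"),
    ("male_36-40", "Weekend-ready", "Running jackets from $89", "jacket.jpg"),
])

# The "ad" is only this template until a row is poured into it.
TEMPLATE = ('<div class="ad"><h1>{headline}</h1>'
            '<p>{body}</p><img src="{image}" alt=""></div>')


def assemble_ad(conn: sqlite3.Connection, segment: str) -> Optional[str]:
    """Build the ad markup at display time from the row for this segment."""
    row = conn.execute(
        "SELECT headline, body, image FROM creative WHERE segment = ?", (segment,)
    ).fetchone()
    if row is None:
        return None  # no creative for this segment
    headline, body, image = (html.escape(value) for value in row)
    return TEMPLATE.format(headline=headline, body=body, image=image)


print(assemble_ad(db, "male_36-40"))
```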

This dynamic-advertising approach is making its way to video-analytics systems and digital-signage content. Because facial detection is anonymous, these dynamic ads won’t be as personal on public signage as they are on our own devices. And that’s good, because this technology will fail if it crosses the boundary of violating our privacy and anonymity. The goal is simply to drive higher relevancy by offering more unique messages.

Dynamic ads allow for many more ad variations than creating and uploading unique images or videos does. They also allow for testing to see which messages perform best. Advertisers rely on testing so much that it’s built into online dynamic ads.

Anyone who claims to have the secret to creating the perfect ad message or viral video probably shouldn’t be trusted. The best advertisers have always used a blend of street smarts, market testing, educated guesses and pure luck. There’s no magic formula that works every time. Online dynamic ads generate thousands of ad variations, and the ones that perform best are tracked and displayed more frequently. The machine is learning which ads work best based on actual human behavior, which is why this approach is often described as AI.
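“Track what works and show it more often” is commonly implemented as a multi-armed bandit. This is a minimal epsilon-greedy sketch, one of the simplest bandit strategies, not the algorithm any specific ad platform uses; the variant names, the 10% exploration rate and the simulated success rates are assumptions.

```python
import random
from typing import Dict, List


class EpsilonGreedy:
    """Mostly show the best-performing variant; occasionally try another."""

    def __init__(self, variants: List[str], epsilon: float = 0.1, seed: int = 0) -> None:
        self.epsilon = epsilon  # share of impressions spent exploring
        self.shows: Dict[str, int] = {v: 0 for v in variants}
        self.wins: Dict[str, int] = {v: 0 for v in variants}
        self.rng = random.Random(seed)

    def rate(self, variant: str) -> float:
        shows = self.shows[variant]
        # Untried variants score infinity so each one gets shown at least once.
        return self.wins[variant] / shows if shows else float("inf")

    def choose(self) -> str:
        if self.rng.random() < self.epsilon:
            return self.rng.choice(list(self.shows))  # explore at random
        return max(self.shows, key=self.rate)         # exploit the current leader

    def record(self, variant: str, success: bool) -> None:
        """Log one impression and whether it produced the desired action."""
        self.shows[variant] += 1
        self.wins[variant] += int(success)


# Simulated usage with made-up success rates; "B" should usually win out.
truth = {"A": 0.02, "B": 0.10, "C": 0.05}
bandit = EpsilonGreedy(list(truth), epsilon=0.1, seed=1)
sim = random.Random(2)
for _ in range(10_000):
    v = bandit.choose()
    bandit.record(v, sim.random() < truth[v])
print(bandit.shows)
```

The exploration share is the price of learning: a small fraction of impressions goes to weaker variants so that a new favorite can still emerge.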

Digital signage is following this approach of automated testing, but it’s doing so with less information. Online ads track whether they succeeded in getting an individual person to take action; by contrast, digital signage can’t close that loop entirely. However, dynamic ads can keep track of which visuals commanded the most attention. The systems can keep aggregate data about which demographic segment looked at the screen the longest, and use that data to choose ad variations for future viewers.
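Using attention as the success signal might look like the sketch below. It assumes the analytics system reports a segment label and a dwell time in seconds for each viewing; only per-segment totals are kept, never per-person records. The class and method names are hypothetical.

```python
from collections import defaultdict
from typing import DefaultDict, List, Optional, Tuple


class DwellStats:
    """Aggregate attention per (segment, variant); no per-viewer records kept."""

    def __init__(self) -> None:
        # (segment, variant) -> [total_seconds, view_count]
        self._totals: DefaultDict[Tuple[str, str], List[float]] = defaultdict(lambda: [0.0, 0])

    def record(self, segment: str, variant: str, dwell_seconds: float) -> None:
        totals = self._totals[(segment, variant)]
        totals[0] += dwell_seconds
        totals[1] += 1

    def mean_dwell(self, segment: str, variant: str) -> float:
        total, count = self._totals.get((segment, variant), (0.0, 0))
        return total / count if count else 0.0

    def best_variant(self, segment: str, variants: List[str]) -> Optional[str]:
        """Variant with the longest average look for this segment, if any data."""
        seen = [v for v in variants if (segment, v) in self._totals]
        if not seen:
            return None  # no history yet: let the scheduler explore
        return max(seen, key=lambda v: self.mean_dwell(segment, v))
```

In practice this would be paired with some exploration, as in online testing, so that untried variants still get screen time.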

With large data sets of product images and headline variations, thousands of unique ads are possible. ML reduces the guesswork and helps deliver the right message to the right segment of people to capture their attention.
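The “thousands of unique ads” figure follows from simple multiplication: the number of combinations is the product of the option counts. The library sizes below are invented for illustration (40 images × 25 headlines × 3 layouts = 3,000 ads).

```python
from itertools import product
from math import prod
from typing import Dict, Iterator, List

# Hypothetical asset library; the counts are illustrative only.
LIBRARY: Dict[str, List[str]] = {
    "product_image": [f"img_{i:02d}.jpg" for i in range(40)],
    "headline": [f"headline_{i:02d}" for i in range(25)],
    "layout": ["portrait", "landscape", "split"],
}

# Number of unique ads = product of the option counts: 40 * 25 * 3.
variant_count = prod(len(options) for options in LIBRARY.values())


def variants(library: Dict[str, List[str]]) -> Iterator[Dict[str, str]]:
    """Lazily yield every combination, one dict per ad, without storing them all."""
    keys = list(library)
    for combo in product(*library.values()):
        yield dict(zip(keys, combo))


print(variant_count)            # 3000
print(next(variants(LIBRARY)))  # first combination
```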

Dynamic ads, whose creation combines graphics, video and data, require programmers and designers to work together with marketing teams and data specialists. And, in creating them, the team can marshal far more data than the video-analytics system reports. The team can use weather, sports scores, traffic and trending social-media topics, too. In short, dynamic ads can leverage any source of data to improve their relevancy and increase results, including point-of-sale metrics. I would argue that live, in-store video analytics combined with outside-the-door data represents the future of retail advertising.
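Combining in-store analytics with outside-the-door data can start as a simple scoring rule. In this sketch, the weather and sports feeds, the tag names, the temperature thresholds and the segment-match weight are all assumptions; a production system might learn these weights rather than hard-code them.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Optional, Set


@dataclass
class Context:
    """Signals available when an ad is chosen; every feed here is hypothetical."""
    segment: str          # from the in-store video-analytics system
    temperature_c: float  # e.g. from a weather feed
    raining: bool
    home_team_won: bool   # e.g. from a sports-scores feed


@dataclass
class Ad:
    name: str
    segments: FrozenSet[str]  # segments the ad targets; empty means everyone
    tags: FrozenSet[str]      # live conditions the creative suits


def live_tags(ctx: Context) -> Set[str]:
    """Turn raw outside-the-door data into simple condition tags."""
    tags = set()
    if ctx.raining:
        tags.add("rain")
    if ctx.temperature_c < 10:
        tags.add("cold")
    elif ctx.temperature_c > 25:
        tags.add("hot")
    if ctx.home_team_won:
        tags.add("team")
    return tags


def pick_ad(ads: List[Ad], ctx: Context) -> Optional[Ad]:
    """Prefer ads for the viewer's segment that match the most live conditions."""
    conditions = live_tags(ctx)
    eligible = [ad for ad in ads if not ad.segments or ctx.segment in ad.segments]

    def score(ad: Ad) -> int:
        segment_bonus = 2 if ctx.segment in ad.segments else 0  # arbitrary weight
        return segment_bonus + len(ad.tags & conditions)

    return max(eligible, key=score, default=None)
```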

Where To Start

Video analytics and facial-detection systems generate a lot of interest at AV and retail trade shows. Content creation and dynamic programming don’t generate as much attention, because they’re “the hard part.”

Retailers are looking to create experiences to lure people off their ecommerce couches and entice them to shop in real life. In-store AV is driving those experiences with videowalls and experiential content.

Making this content unique to the individual—to “people like me”—will improve in-store experiences and drive results.
