Consumer expectations are in flux. How can immersive display technologies help forge a stronger bridge between physical and digital spaces?

As we look at digital signage, public displays, point-of-sale (POS) experiences and other immersive-experience technologies, it becomes clear we’re at a tipping point. Consumer behaviors are changing at an unprecedented rate, and not just because of the COVID-19 pandemic. Today’s consumer expectations are in a state of flux, continually reshaped by retail technology that enables convenient, personalized experiences driven from our mobile devices.

Now that people can easily socialize, shop and play across the digital and physical worlds using their mobile phones, a precedent has been set: everything we experience in our daily lives should work this way.

Immersive digital-display technologies are fast becoming one of the most critical platforms for forging a stronger bridge between the physical and the digital spaces.

Go Big Or Go Home

Let’s look at the big picture. Over the past 18 months, we’ve watched the display industry go through some notable disruption. LCD and LED displays are dropping in price, OLED production is scaling up and a 4K laser projector that delivers a 140-inch image is now priced competitively with a single 65-inch OLED display. On top of that, at CES this past January, manufacturers such as LG, Samsung and TCL trotted out next-generation, room-sized solutions built on 8K microLED and Mini-LED technology. Both technologies are on the roadmap to compete with, and ultimately replace, OLED over the next decade.

Is It XR Yet?

We’ve reached the point at which display technology is flexible enough, both figuratively and literally, to be used to digitally transform every surface of a physical space. With the right type of technology and content, any ceiling, wall, window or floor can be imbued with a digital overlay. In today’s bright-and-shiny speak, we would classify this ability as extended reality (XR), the umbrella term covering virtual reality (VR), augmented reality (AR) and mixed reality (MR).

Admittedly, the use case for VR as part of public-venue experiences remains to be seen; however, AR has gained traction, albeit with the key limiting factor that it requires some type of mobile device to project the digital overlay into the physical realm. So, until all of us have cool AR glasses, we’ll have to continue to rely on the most ubiquitous solution: our mobile phone.

Reliance on the mobile device comes at a cost, because an app or mobile web applet has to be downloaded or opened to bring these types of experiences to life. That can be problematic: as the number of apps in app stores continues to grow, people have become more entrenched in their favorite apps, and on average, users are downloading fewer apps than ever before. According to Comscore’s 2019 report on the state of mobile markets, the share of users who downloaded zero apps rose from 51 percent in 2017 to 67 percent in 2019.

Hybrid Solutions

It’s possible to create the immersive, augmented experiences we get on our mobile phones without the phone. The approach is a hybrid strategy that combines projection-mapped AR with arrays of transparent or large-format digital screens tiled over walls, ceilings and floors. The result is room-scale interactive surfaces that serve as the visualization framework for life-sized digital overlays on physical spaces.

One of the biggest technology disruptors we’ve been tracking is Lightform. This unique content-authoring platform aims to democratize the creation of interactive, room-scale, projection-mapped experiences. For years, it was a very expensive and complex proposition to project AR experiences. It required specialized training, as well as the use of special hardware and software, to knit together immersive environments. The Lightform folks disrupted the status quo by fully automating the content-creation process and integrating it into a powerful projection system with intuitive design tools. This allows designers and artists to scan a room effortlessly and immediately begin to build content in the physical space using a combination of computer vision and content-creation software.
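To make the "knit together" step less abstract: a core geometric operation in projection mapping is pre-distorting flat content so it lands correctly on a surface the projector sees at an angle. The sketch below is a generic illustration, not Lightform's method (which isn't described here). It assumes the four corners of one flat surface have already been located in projector pixel coordinates (for example, by a camera scan) and uses the standard closed-form square-to-quadrilateral homography to map normalized content coordinates onto that surface. The function names `square_to_quad` and `warp` and the corner values are hypothetical.

```python
from typing import List, Tuple

Point = Tuple[float, float]
Coeffs = Tuple[float, float, float, float, float, float, float, float]


def square_to_quad(corners: List[Point]) -> Coeffs:
    """Compute homography coefficients that map the unit square
    (0,0), (1,0), (1,1), (0,1) onto `corners`, given in the same order
    (top-left, top-right, bottom-right, bottom-left, in projector pixels)."""
    (x0, y0), (x1, y1), (x2, y2), (x3, y3) = corners
    dx1, dx2, dx3 = x1 - x2, x3 - x2, x0 - x1 + x2 - x3
    dy1, dy2, dy3 = y1 - y2, y3 - y2, y0 - y1 + y2 - y3
    det = dx1 * dy2 - dx2 * dy1
    if det == 0:
        raise ValueError("corners do not form a usable quadrilateral")
    # Perspective terms: both are zero when the surface appears as a parallelogram.
    g = (dx3 * dy2 - dx2 * dy3) / det
    h = (dx1 * dy3 - dx3 * dy1) / det
    a = x1 - x0 + g * x1
    b = x3 - x0 + h * x3
    d = y1 - y0 + g * y1
    e = y3 - y0 + h * y3
    return (a, b, x0, d, e, y0, g, h)


def warp(coeffs: Coeffs, u: float, v: float) -> Point:
    """Map a normalized content coordinate (u, v) in [0, 1] x [0, 1]
    to the projector pixel where it should be drawn."""
    a, b, c, d, e, f, g, h = coeffs
    w = g * u + h * v + 1.0
    return ((a * u + b * v + c) / w, (d * u + e * v + f) / w)


# Hypothetical corners of a wall panel as detected by a scan (projector pixels).
wall = [(100.0, 50.0), (500.0, 80.0), (480.0, 400.0), (90.0, 360.0)]
H = square_to_quad(wall)
print(warp(H, 0.0, 0.0))  # (100.0, 50.0): content's top-left lands on the panel's top-left
```

A production system would also handle non-planar surfaces, lens distortion and many surfaces at once; this only shows why four detected corners are enough to pre-distort content for a single flat panel.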

Sound Matters?

Now that we have the room-scale XR framework figured out, let’s talk about haptics and hyper-directional audio. Tactile and auditory enhancements in an immersive digital and physical experience are just as critical as the visual components.

Two startups to watch are using sound in very different ways. The first is Open Source Business Inc., whose product is currently in stealth mode. Code-named “Audioflash,” the solution was originally developed to help the visually impaired navigate the world; now, however, it’s aimed at retail, delivering hyper-discrete audio messages perceptible only to a single targeted person. In the retail use case, as part of an in-aisle immersive digital experience, it could send one-to-one personalized messages to multiple targeted individuals. In essence, people standing beside each other could each hear their own unique message, because the proprietary technology can be “aimed” directly at a single individual’s ears.

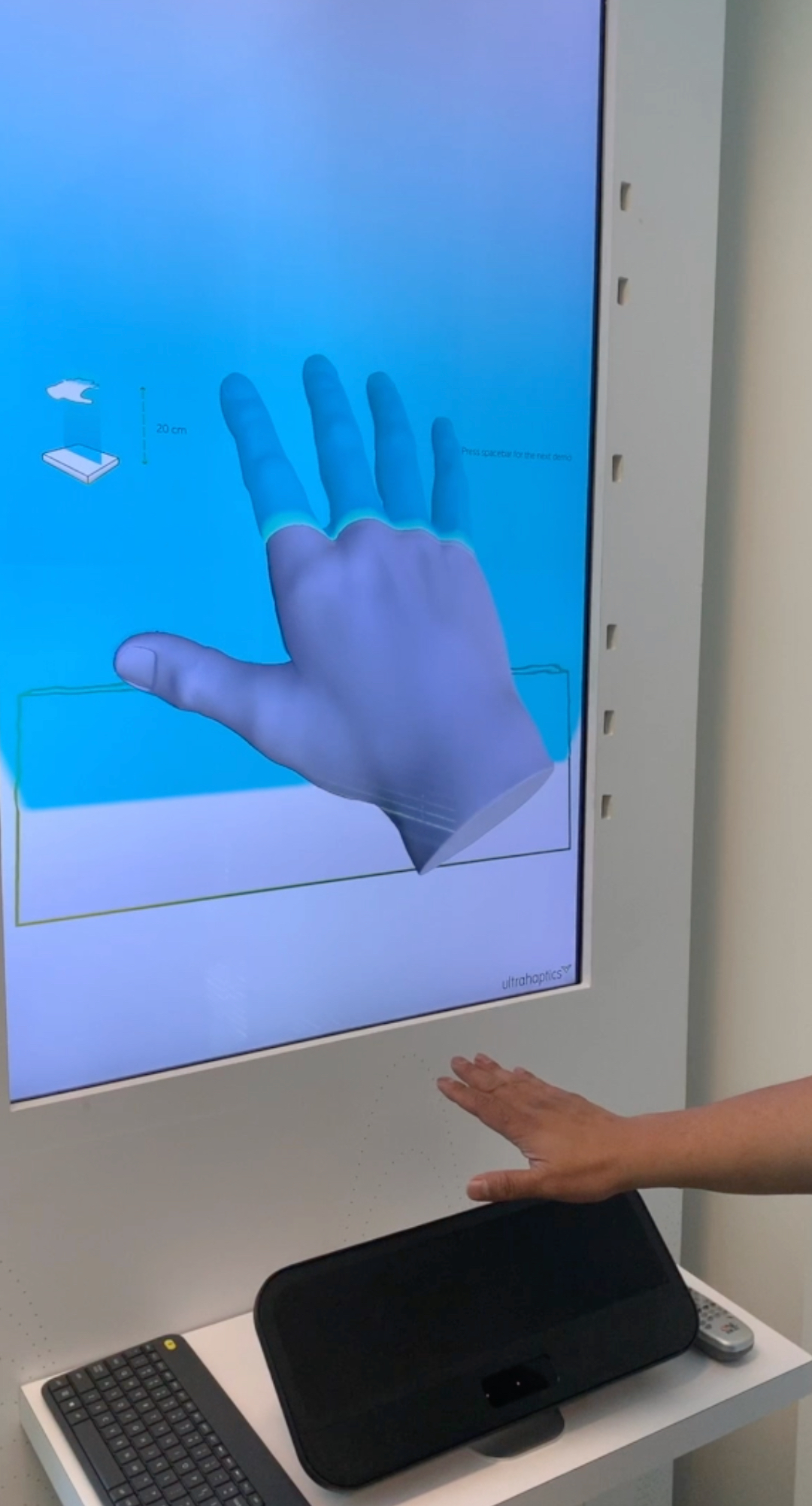

The second startup, also worth checking out, is Ultraleap. The company engineers midair haptic systems built on arrays of ultrasound transducers. Its patented algorithms fire the transducers at precisely offset times so that their sound-wave “pulses” converge on the same point in space at the same moment. The result is the sensation of touching a physical object floating in midair.
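The focusing principle behind midair haptics can be illustrated with a generic phased-array calculation. This is not Ultraleap's patented algorithm; it is a minimal sketch assuming point-like transducers, a single focal point and sound traveling at roughly 343 m/s in air. Each transducer's firing delay is chosen so that every wavefront arrives at the focal point at the same instant: the element farthest from the target fires first and nearer elements wait. The function names, the 40 kHz carrier frequency and the array geometry are illustrative assumptions.

```python
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]

# Assumption: sound travels at roughly 343 m/s in air at about 20 degrees C.
SPEED_OF_SOUND = 343.0  # meters per second


def focus_delays(transducers: List[Vec3], focal_point: Vec3) -> List[float]:
    """Return a firing delay in seconds for each transducer so that every
    wavefront reaches `focal_point` at the same instant. The element farthest
    from the target fires first (delay 0); nearer elements wait longer."""
    distances = [math.dist(p, focal_point) for p in transducers]
    farthest = max(distances)
    return [(farthest - d) / SPEED_OF_SOUND for d in distances]


def focus_phases(transducers: List[Vec3], focal_point: Vec3,
                 frequency_hz: float = 40_000.0) -> List[float]:
    """Express the same delays as phase lags in radians, in [0, 2*pi).
    Driving element i as sin(2*pi*f*t - lag_i) is equivalent to delaying it.
    The 40 kHz default is an illustrative assumption."""
    return [(2 * math.pi * frequency_hz * t) % (2 * math.pi)
            for t in focus_delays(transducers, focal_point)]


# Hypothetical 4 x 4 array with 1 cm spacing, focusing 20 cm above its center.
elements = [(x * 0.01, y * 0.01, 0.0) for x in range(4) for y in range(4)]
target = (0.015, 0.015, 0.20)
delays = focus_delays(elements, target)
phases = focus_phases(elements, target)
```

Expressing the delays as phase lags is how a continuously driven array would typically apply them; moving the focal point around to "draw" a shape you can feel amounts to recomputing the lags for each new target.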

Imagine an experience in which a digital avatar appears and midair haptic sensations tap you on the shoulder to get your attention, or in which that avatar beams customized “whisper” messages to the ears of potential shoppers as they walk down the aisles.

Next-Generation Gesture Controls

One of the most exciting developments in touchless interactivity is Google’s Project Soli. The technology uses a miniature radar chip to interpret physical movement across a wide range of scales, from subtle motions such as the twist of a finger to larger changes in body position. One of its first applications is in Google’s latest Pixel phone, which uses it in two ways. First, there’s “presence” sensing, whereby the radar chip can tell the phone to do things like turn off an alarm, activate facial recognition and turn on the display as you reach for the phone. Second, there are midair gestures, whereby the radar chip interprets small hand-waving movements (like air swiping) to control music, skipping ahead to the next song or going back to the previous one in a playlist.

You’re probably thinking, “Haven’t air gestures been around for a while?” The answer is yes, they have. This time, though, things are different. Earlier gesture technologies, such as Xbox Kinect cameras and Leap Motion controllers, failed because they were designed for onscreen game interaction (e.g., waving arms or dancing) rather than for accurate, fine motor movements that directly manipulate onscreen user interfaces (UIs). As this technology makes its way into other mobile electronics, consumers will learn this type of interaction and, accordingly, expect it to be part of all future immersive-display experiences.

Is it possible for immersive, multisensory digital experiences to scale globally to 100 locations, 1,000 locations or more? The answer is a big, fat “maybe.” Installation and operational support can always scale with available resources and technical expertise; the ultimate challenge lies in managing and distributing digital assets across the enterprise.

State-of-the-art digital-signage content-management-system (CMS) providers are well suited to mainstream digital content management and distribution tasks, but the assets created for projection-mapped systems, machine-code-driven actuators and customized audio segments are too new to be among their supported file types. Over the next 12 months, we should see much broader adoption of, and support for, those kinds of files as CMS providers adapt to XR-related content trends.

Science-fiction fantasies of humans interacting with virtual experiences (you know, like what we’ve been seeing in the movies for decades) are very close to becoming a reality. In a world where standard AV-integration services for small to medium rooms are taking a back seat to do-it-yourself (DIY) solutions, these new technologies offer integration firms an exciting, clearly growing space to pursue.

To read the latest edition of our IT/AV Report, click here.