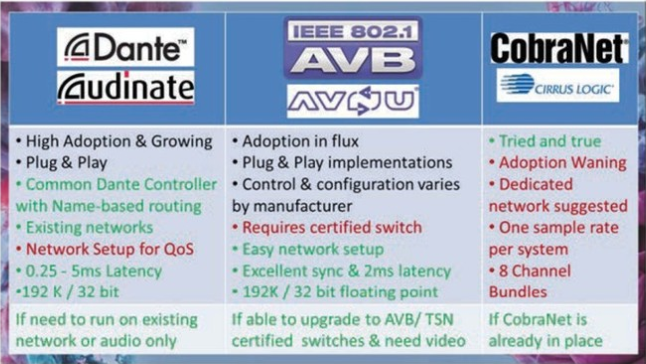

In Part 1 of this article, we discussed the fundamentals of the OSI Model for networked systems as it related to audio-over-IP (AoIP), along with protocols for priority, timing and sync. Now, we’ll dive in and look at three common methodologies—their strengths, challenges, specific capabilities, when to use which and where to learn more.

CobraNet: ‘Tried And True’

In 1996, Peak Audio launched CobraNet, which was quickly adopted by large-scale, high-profile applications, such as the 1997 Super Bowl Halftime Show and Disney’s Animal Kingdom. CobraNet is now owned and supported by Cirrus Logic.

CobraNet Specs

CobraNet is a combination of software, hardware and a network protocol that manufacturers license for use in their equipment. It is a Layer 2 protocol that’s compatible with standard Ethernet network infrastructure. CobraNet supports up to 64 audio channels in and 64 channels out, at sample rates of 48kHz or 96kHz. Channels are routed in bundles of either four or eight channels, depending on sample rate and latency. Adding VLANs can increase the channel counts available to larger systems.
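To see why bundle size depends on sample rate, it helps to work out the raw audio payload a bundle carries. The sketch below is plain arithmetic under stated assumptions: 24-bit samples, with packet and header overhead ignored, so real on-the-wire rates are higher. Pairing eight channels at 48kHz with four at 96kHz is illustrative, not a CobraNet specification.

```python
# Raw audio payload carried by one CobraNet bundle, in megabits per second.
# Assumptions (not from the article): 24-bit samples, and packet/header
# overhead ignored, so real on-the-wire rates are higher than these figures.

def bundle_payload_mbps(channels: int, sample_rate_hz: int, bit_depth: int = 24) -> float:
    """Channels x samples per second x bits per sample, expressed in Mbps."""
    return channels * sample_rate_hz * bit_depth / 1_000_000


# Illustrative pairing: eight channels at 48kHz versus four channels at 96kHz.
for channels, rate_hz in [(8, 48_000), (4, 96_000)]:
    mbps = bundle_payload_mbps(channels, rate_hz)
    print(f"{channels} ch @ {rate_hz // 1000}kHz -> {mbps:.3f} Mbps of audio payload")
```

Both lines print 9.216 Mbps: doubling the sample rate doubles the data per channel, so a bundle carrying the same amount of audio data holds half as many channels.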

CobraNet Syncing

CobraNet uses a “Conductor,” the equivalent of a master clock, which sends out a “beat packet” for the entire system; as a result, the sample rate must be consistent across the network. Clock accuracy is within 10µs for channels that originate on the same switch, and less precise for devices connected to different switches. Latency is fixed across the entire system and user-selectable at 1.33ms, 2.66ms or 5.33ms. Higher sample rates and lower latency come at the cost of lower channel counts.
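The three latency settings look oddly specific until they are expressed in sample periods. The worked calculation below shows that, at 48kHz, 1.33ms, 2.66ms and 5.33ms correspond to 64, 128 and 256 samples (the published figures are rounded or truncated). This is arithmetic only; the idea that CobraNet’s buffering is organized around these sample counts is an assumption, not something stated here.

```python
from fractions import Fraction


def latency_ms(samples: int, sample_rate_hz: int) -> Fraction:
    """Exact duration of `samples` sample periods, in milliseconds."""
    return Fraction(samples * 1000, sample_rate_hz)


# At 48kHz, 64, 128 and 256 samples correspond to the three quoted settings.
for samples in (64, 128, 256):
    ms = latency_ms(samples, 48_000)
    print(f"{samples:>3} samples @ 48kHz = {float(ms):.3f} ms")
# prints 1.333, 2.667 and 5.333 ms
```

At 96kHz, the same durations span twice as many samples, which is one way to picture why higher sample rates put more pressure on a fixed-latency system.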

System Design For CobraNet

A dedicated network is highly recommended for CobraNet, but it’s not required. Additional network traffic can lead to unpredictable audio performance, and VLANs are one option for segmenting the audio traffic from other network data. Many CobraNet devices offer primary and secondary ports for redundancy, providing automatic cutover in case of a network failure. Don’t use those ports to daisy-chain between devices.
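The primary/secondary arrangement can be pictured as a simple failover rule. The class below is a hypothetical model written for illustration; it is not CobraNet firmware logic, and real link-failure detection and cutover timing are vendor-specific.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class DualPortDevice:
    """Hypothetical model of a device with primary and secondary network ports.

    This illustrates the failover idea only; it is not CobraNet firmware logic,
    and real link-failure detection and cutover timing are vendor-specific.
    """
    primary_link_up: bool = True
    secondary_link_up: bool = True

    def active_port(self) -> Optional[str]:
        # Stay on the primary path while it is healthy.
        if self.primary_link_up:
            return "primary"
        # Cut over automatically when the primary network fails.
        if self.secondary_link_up:
            return "secondary"
        # Both paths down: no audio can be delivered.
        return None


device = DualPortDevice()
device.primary_link_up = False
print(device.active_port())  # secondary
```

In this model the secondary port exists only as a standby path; using it to daisy-chain another device would leave nothing to cut over to.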

There are some excellent design resources and whitepapers available on the CobraNet Network Design Support page. Two software tools worth looking for are CobraCAD, for network design assistance, and Discovery Utility (“Disco”), for monitoring, troubleshooting and maintenance. Adoption of CobraNet for new implementations is declining. However, its long-standing, broad use still drives market demand for CobraNet products to support and expand existing systems.

AVB/TSN: ‘It’s Not A Protocol, It’s A Standard’

In 2009, a group of AV and IT companies formed the Avnu Alliance to create a new method for transporting audio and video over a network. Their objectives included low latency, low cost and interoperability between manufacturers, all within an open standard available to anyone. The Avnu Alliance worked with the Institute of Electrical and Electronics Engineers (IEEE) to examine the standards already in place for moving audio and video across a network (such as DiffServ, QoS and PTP). Those standards were pulled together into a single standard called Audio Video Bridging/Time-Sensitive Networking, more commonly known by the acronym AVB/TSN, or just AVB.

System Design For AVB

AVB does not require a dedicated network or a VLAN; it can run audio, video and control alongside other traffic on the same network. Because media prioritization is part of the AVB definition, audio delivery does not suffer even when non-media traffic consumes a lot of bandwidth. AVB does require AVB-certified network switches, which, until recently, were relatively scarce and expensive.

AVB Signal Prioritization

Media prioritization is accomplished using stream reservations: network pathways that can dedicate up to 75 percent of available bandwidth to passing media. Non-AVB traffic is held in a separate queue or buffer in the switch and is handled with its normal (e.g., DiffServ) priority. With AVB, there is no need to set up QoS manually; prioritization is handled by activating an “Enable AVB” function in the switch (if it isn’t already enabled by default), which can save setup time.
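The 75 percent cap is easy to put into numbers. The sketch below computes the reservable share of a link and runs a simplified, hypothetical admission check. Real stream reservation works hop by hop along the path and accounts for per-frame overhead, which this ignores, and the stream sizes in the example are made-up figures.

```python
from typing import List

# AVB caps stream reservations at 75% of a link's bandwidth; the rest is left
# for ordinary traffic. The admission loop below is a simplified, hypothetical
# illustration: real stream reservation works hop by hop along the path and
# accounts for per-frame overhead, which this sketch ignores.
MAX_RESERVABLE_FRACTION = 0.75


def reservable_mbps(link_mbps: float) -> float:
    return link_mbps * MAX_RESERVABLE_FRACTION


def admit_streams(requests_mbps: List[float], link_mbps: float) -> List[bool]:
    """Accept each requested stream in order while it still fits the 75% budget."""
    budget = reservable_mbps(link_mbps)
    used = 0.0
    decisions = []
    for request in requests_mbps:
        fits = used + request <= budget
        if fits:
            used += request
        decisions.append(fits)
    return decisions


# Gigabit link: 750 Mbps reservable for media, 250 Mbps left for other traffic.
print(reservable_mbps(1000))                 # 750.0
print(admit_streams([300, 300, 200], 1000))  # [True, True, False]
```

The third (hypothetical) stream is refused because 800 Mbps would exceed the 750 Mbps budget, which is how the remaining quarter of the link stays available to non-AVB traffic.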

AVB Specs

AVB supports multiple simultaneous sample rates up to 192kHz, with 32-bit floating-point bit depth. Supported channel counts vary by device. Up to 400 simultaneous channels can run on a single network, and some manufacturers report counts larger than 512. On a gigabit network, AVB guarantees sync of less than 0.5ms and latency of 2ms across up to seven hops; some manufacturers spec their equipment with substantially lower latency. Audio, video, control and any other payload data are all supported on the same network within AVB/TSN.
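A quick way to sanity-check a planned path against the 2ms, seven-hop figure is to sum per-hop latencies. The sketch below assumes an even split of the bound across hops purely for illustration, and the per-hop latencies you would feed it are hypothetical inputs, not values from any specification.

```python
from typing import List

# Figures quoted above: 2ms across up to seven hops on a gigabit network.
LATENCY_BOUND_MS = 2.0
MAX_HOPS = 7

# Average share of the bound per hop if it were split evenly (an even split
# is an assumption made for illustration).
print(f"{LATENCY_BOUND_MS / MAX_HOPS * 1000:.0f} µs per hop")  # 286 µs per hop


def path_within_bound(per_hop_latency_us: List[float]) -> bool:
    """Check a hypothetical path's per-hop latencies against the quoted figures."""
    if len(per_hop_latency_us) > MAX_HOPS:
        return False
    return sum(per_hop_latency_us) / 1000 <= LATENCY_BOUND_MS
```

For example, seven hops at 250µs each total 1.75ms and pass, while seven hops at 300µs each total 2.1ms and do not.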

Dante: ‘The New Kid Who’s Not So New’

In 2003, a team of former Motorola employees came together to form Audinate. They had the objective of creating a proprietary system of software and hardware for networking audio, which became Dante. Dante is currently implemented by more than 350 manufacturers, in more than a thousand products, with more than 30 million channels of Dante network audio in use. Adoption has been widespread, including significant events for Pope Francis, Paul McCartney, Bruce Springsteen, Elton John, Bob Dylan, Kenny Chesney, The Foo Fighters and many others.

System Design For Dante

Using a proprietary methodology, Audinate manufactures the Dante networking hardware that’s embedded in other audio manufacturers’ hardware. Audinate also developed Dante Controller, which is the free software that’s used across all implementations of Dante for routing, system configuration and management. That makes working with Dante consistent across all manufacturers, and it ensures that all Dante-enabled devices, independent of who makes the audio hardware, are plug-and-play compatible.

Unmanaged network switches are suitable when creating a dedicated Dante audio network. If multiple types of traffic will travel on the same network, a managed switch provides additional flexibility for establishing priority with DiffServ. When Dante-enabled devices are connected to a network, DHCP automatically assigns their IP addresses, and they automatically appear in the Dante Controller software.

Dante Controller can run on any computer connected to the same network as the Dante-enabled audio hardware. In Dante Controller, the system administrator can give devices and individual IO channels easily identifiable, plain-English labels. They can also quickly route individual audio channels between sources and destinations using a cross-point matrix. Dante refers to channel routing as “subscription.” Although Dante uses UDP for speed, Dante Controller verifies the quality of each subscription at the application layer, with the receiving unit sending data back to the transmitter.
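The cross-point idea behind subscriptions can be sketched as a small data structure: each receive channel points at one source, and one source can feed many receivers. The class, device names and channel labels below are hypothetical and only model the routing concept; they do not describe Dante Controller’s internals.

```python
from typing import Dict, List, Optional, Tuple

# (device label, channel label); labels like these are the plain-English
# names an administrator might assign. All names here are hypothetical.
Channel = Tuple[str, str]


class SubscriptionMatrix:
    """Hypothetical model of a cross-point routing grid, not Dante's internals.

    Each receive channel subscribes to at most one transmit channel, while one
    transmit channel may feed many receive channels.
    """

    def __init__(self) -> None:
        self._source_for: Dict[Channel, Channel] = {}

    def subscribe(self, rx: Channel, tx: Channel) -> None:
        # Setting a new cross-point replaces any previous subscription for rx.
        self._source_for[rx] = tx

    def unsubscribe(self, rx: Channel) -> None:
        self._source_for.pop(rx, None)

    def source_of(self, rx: Channel) -> Optional[Channel]:
        return self._source_for.get(rx)

    def listeners_of(self, tx: Channel) -> List[Channel]:
        return [rx for rx, src in self._source_for.items() if src == tx]


grid = SubscriptionMatrix()
grid.subscribe(("FOH Amp", "In 1"), ("Stage Box", "Lead Vocal"))
grid.subscribe(("Recorder", "Track 1"), ("Stage Box", "Lead Vocal"))
print(grid.listeners_of(("Stage Box", "Lead Vocal")))
```

Keying the map by receive channel enforces the one-source-per-receiver rule automatically, just as checking a new box in a matrix row replaces the old one.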

Dante Specs

Depending on the specific device, Dante supports up to 512 channels in and 512 channels out simultaneously, each of which can be routed individually. A Dante system can run sample rates up to 192kHz at 32-bit, and multiple sample rates can coexist in the same system. However, the sample rates of the transmitter and receiver must match for a subscription, because Dante does not provide sample-rate conversion within the methodology itself.
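The matching rule amounts to a one-line check before a subscription is made. The record type and device names below are hypothetical, used only to illustrate the rule; they are not part of any Dante API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DeviceInfo:
    """Hypothetical record for illustration; not a Dante API type."""
    name: str
    sample_rate_hz: int


def can_subscribe(tx: DeviceInfo, rx: DeviceInfo) -> bool:
    # No sample-rate conversion happens in the network, so the rates must match.
    return tx.sample_rate_hz == rx.sample_rate_hz


console = DeviceInfo("Console", 48_000)
recorder = DeviceInfo("Recorder", 96_000)
print(can_subscribe(console, recorder))  # False: the sample rates differ
```

Any conversion between the two rates would have to happen outside the Dante network itself.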

Dante Controller allows you to adjust latency on a Dante system from 150μs to 5ms. Latency is set at the receiver in Dante Controller; it’s user adjustable, and it remains constant once set. Recommended minimum latency settings typically depend on network size. For example, 150μs is often used if there is only one switch in the system, and 250μs with three switches; 500μs is typically a safe option on gigabit networks with up to five switches. Larger networks call for progressively higher settings. When a subscription is established, the receiver and transmitter communicate to ensure that latency is set high enough.
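The rule-of-thumb figures above can be captured as a small lookup. This is a sketch under stated assumptions: the tiers come straight from the examples in the text, treating two switches like three (and four like five) is a conservative choice made here for illustration, and the “high enough” step is a simplification rather than a description of how Dante actually negotiates latency.

```python
from typing import List, Optional, Tuple

# Guideline tiers quoted in the text: (maximum switch count, latency in µs).
# The 500µs tier assumes a gigabit network. Treating two switches like three
# (and four like five) is a conservative assumption for illustration.
LATENCY_TIERS: List[Tuple[int, int]] = [(1, 150), (3, 250), (5, 500)]


def suggested_min_latency_us(switch_count: int) -> Optional[int]:
    for max_switches, latency_us in LATENCY_TIERS:
        if switch_count <= max_switches:
            return latency_us
    # Beyond five switches the text gives no figure; check the manufacturer's guidance.
    return None


def effective_latency_us(requested_us: int, required_us: int) -> int:
    """Simplified view of 'high enough': never go below what the path needs."""
    return max(requested_us, required_us)


print(suggested_min_latency_us(3))                 # 250
print(effective_latency_us(150, required_us=500))  # 500
```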

Many Dante devices provide a secondary port for redundancy, allowing automatic cutover to a secondary network if the primary network fails. The secondary port should be used only for redundancy, not for daisy-chaining devices.

Expanding The Capability Of Dante

In addition to Dante Controller, other software options are available to expand the capability of Dante. Dante Virtual Soundcard lets audio applications running on a connected computer, such as digital audio workstations (DAWs) like Pro Tools or Cubase, send and receive individual channels on the network. With Virtual Soundcard, a DAW can record sources directly from, and play back directly onto, a Dante network. For example, if a live sound production that uses Dante wants to record shows to multitrack or perform a virtual sound check, the production can simply connect the computer running the DAW to the network, with no additional hardware IO interfaces required.

Dante Via is another optional application that enables a standard Apple Mac or Windows PC to function as a Dante device, placing the computer’s primary audio output onto the network. One use case would be taking audio playback from a PC and distributing it throughout a facility without running additional audio lines.

Dante Domain Manager

Until recently, all Dante devices connected to a network automatically appeared in Dante Controller, and they could not be isolated for individual management or sub-systems. In short, every instance of Dante Controller on a network had access to all Dante devices. Additionally, routing Dante across multiple LANs was not readily available. Dante Domain Manager, which was announced at ISE 2017 and recently released, allows a Dante system to be segmented into multiple domains to simplify management and connect across multiple LANs. It’s particularly beneficial for campus-wide and other large deployments.
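The shift from “every controller sees every device” to segmented domains can be modeled as a visibility filter. The registry, domain names, users and devices below are all hypothetical; this illustrates the segmentation concept only and is not Dante Domain Manager’s API or its actual user and role model.

```python
from dataclasses import dataclass, field
from typing import Dict, Set


@dataclass
class DomainRegistry:
    """Hypothetical sketch of domain-based segmentation, not Dante Domain Manager's API.

    device_domain maps each device to the domain it has been assigned to;
    user_domains maps each user to the domains they may manage.
    """
    device_domain: Dict[str, str] = field(default_factory=dict)
    user_domains: Dict[str, Set[str]] = field(default_factory=dict)

    def visible_devices(self, user: str) -> Set[str]:
        # Without domains, every controller would see every device; here a
        # user sees only devices in the domains they have been granted.
        allowed = self.user_domains.get(user, set())
        return {dev for dev, dom in self.device_domain.items() if dom in allowed}


campus = DomainRegistry(
    device_domain={"Arena Amp 1": "Arena", "Theater Mixer": "Theater"},
    user_domains={"arena_tech": {"Arena"}, "av_manager": {"Arena", "Theater"}},
)
print(sorted(campus.visible_devices("arena_tech")))  # ['Arena Amp 1']
```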

Audinate provides free training and certification on Dante at its website, and it provides enough information to facilitate the design and implementation of a basic system relatively quickly.

Other AV-Over-IP Systems

There are many other AoIP (or audio-related) networking methodologies supported by various manufacturers and independent proponents. Some examples include A-Net by Aviom, Q-LAN by QSC, EtherSound, RocketNet, Ravenna and HiQnet by Harman (the last is for control only and doesn’t network audio). With so many players in the field using the same underlying network standards, wouldn’t it be great if there were a way to get the multiple options talking to each other? That’s where AES67 comes into play.

AES67 is an open interoperability standard designed to get these methodologies working together. It is a work in progress that’s being adopted by Audinate, the Avnu Alliance and many others. That said, AES67 isn’t the only way to bridge between methodologies. Most devices that support both AVB/TSN and Dante can bridge between the protocols internally. For example, many professional audio mixers have card slots that accept both a Dante card and an AVB card. Those cards provide routable IO, just like any other analog or digital connection on the device, so audio can be exchanged between methodologies by routing it through the mixer.

With all the focus on the network, it’s easy to lose sight of the fact that a network is just a tool: a means to an end, rather than an end in itself. The protocols themselves don’t matter as much as what they allow you to do with the equipment connected to them.

The next time you’re looking at a networked audio system, consider starting with the rest of the system and then working toward the network methodology. Although CobraNet, AVB and Dante all have strengths and limitations, they’re each flexible enough to meet a variety of audio demands. If the right equipment is specified, then there’s a very high likelihood that the available network options are up to the task at hand.

To read more from Sound & Communications, visit the magazine’s website.